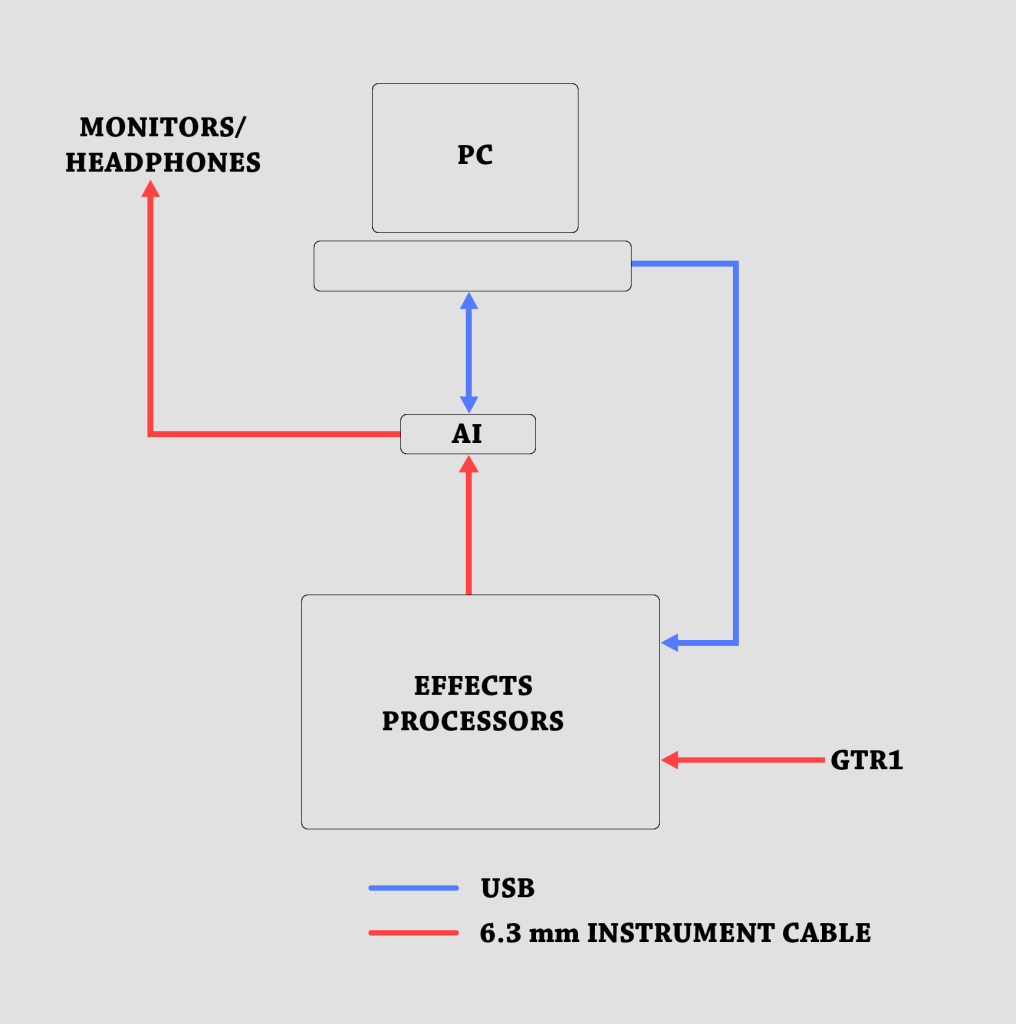

I’ve been working on a music project for the last few months. To work efficiently and keep the guitar sound consistent across recording sessions, I created a structured ‘pipeline’ for recording. The setup also lives in the living room of my apartment, so a classic analog setup with amplifiers and mics was out of the question: it would have been much too loud for my neighbors and much too cumbersome for me. Instead, I opted for a solution involving a lot of digital mumbo jumbo. The diagram above shows the setup.

The analog guitar signal first goes through the chain of Effects Processors via an instrument cable.

The processed analog signal goes into the Audio Interface (AI), which is the heart of the system. The AI lets me monitor the guitar by sending a direct analog output to speakers or headphones. Purists might cringe at this ‘amp-less’ setup, but I feel I get a pretty good sound by the final stage.

The AI is also connected over USB to the workhorse: the PC. The AI samples the analog signal, converts it to a digital signal, and sends it to the PC, where a Digital Audio Workstation (DAW) records it and saves it in a project. In the DAW, I further mould the guitar sound with EQ, effects, and plugins to get the final tone. The DAW also sends an output signal back to the AI to play back recorded tracks, which lets me record over sections I have already recorded. Finally, one of the modules in the effects chain has its own USB connection to the PC, which lets me control that module from the PC and save tone presets.
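To make the sampling step a bit more concrete, here is a minimal Python sketch of what analog-to-digital conversion does conceptually: it measures the signal at a fixed sample rate and rounds each measurement to an integer at a fixed bit depth. The 48 kHz rate, 24-bit depth, and 110 Hz test tone (roughly a guitar's open A string) are assumptions chosen for illustration, not the settings of any particular interface, and a real converter also filters the signal before sampling.

```python
import math

def sample_and_quantize(signal, sample_rate, bit_depth, duration_s):
    """Sample a continuous signal (a function of time in seconds returning
    values in [-1.0, 1.0]) and round each sample to a signed integer code.
    A simplified model of what an audio interface's converter does."""
    max_code = 2 ** (bit_depth - 1) - 1           # 8388607 for 24-bit audio
    num_samples = round(duration_s * sample_rate)
    codes = []
    for n in range(num_samples):
        t = n / sample_rate                        # time of the n-th sample
        value = max(-1.0, min(1.0, signal(t)))     # clip to full scale
        codes.append(round(value * max_code))      # quantize to an integer code
    return codes

# Hypothetical test input: a 110 Hz sine (about the open A string) at half full scale.
def guitar_like_tone(t):
    return 0.5 * math.sin(2 * math.pi * 110.0 * t)

samples = sample_and_quantize(guitar_like_tone, sample_rate=48_000, bit_depth=24, duration_s=0.01)
print(len(samples))  # 10 ms at 48 kHz is 480 samples
```

Higher sample rates and bit depths capture the signal more finely at the cost of more data per second sent to the PC.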

The project uses a handful of different tones that need to sound the same every time I record. Every detail of each tone is saved in a document: knob settings, effects presets, guitar string type, choice of pick, and so on.
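A tone document like this maps naturally onto a small structured record. Below is a minimal Python sketch assuming a hypothetical `TonePreset` record; the field names and example values are made up for illustration and are not my actual settings. The hypothetical `differences` helper lists every setting that changed between a saved tone and the current setup, which is one way to catch a knob that drifted between sessions.

```python
from dataclasses import dataclass, field, asdict

@dataclass
class TonePreset:
    """Hypothetical record for one tone, mirroring the kinds of details
    kept in the tone document (knobs, presets, strings, picks)."""
    name: str
    knob_levels: dict[str, float] = field(default_factory=dict)    # knob -> position
    effects_presets: dict[str, str] = field(default_factory=dict)  # module -> preset name
    string_type: str = ""
    pick: str = ""

def differences(saved: TonePreset, current: TonePreset) -> list[str]:
    """Return one human-readable line for every setting that differs."""
    saved_d, current_d = asdict(saved), asdict(current)
    changes = []
    for key in saved_d:
        if key == "name":
            continue
        a, b = saved_d[key], current_d[key]
        if isinstance(a, dict):
            # Compare nested settings (e.g. individual knobs) one by one.
            for sub in sorted(set(a) | set(b)):
                if a.get(sub) != b.get(sub):
                    changes.append(f"{key}.{sub}: {a.get(sub)} -> {b.get(sub)}")
        elif a != b:
            changes.append(f"{key}: {a} -> {b}")
    return changes

# Hypothetical example: the gain knob drifted since the tone was saved.
rhythm = TonePreset("rhythm", {"gain": 6.5, "treble": 5.0}, {"overdrive": "Preset A"}, "10-46 nickel", "1.0 mm")
today = TonePreset("rhythm", {"gain": 7.0, "treble": 5.0}, {"overdrive": "Preset A"}, "10-46 nickel", "1.0 mm")
for line in differences(rhythm, today):
    print(line)  # prints: knob_levels.gain: 6.5 -> 7.0
```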

So far the setup has been pretty reliable and has worked out well for everything I’ve recorded.